
A/B testing, also called split testing, compares two versions of a web page to see which one performs better. Two visitors can load the same page and see different things: one sees variant A and the other sees variant B. In effect, the two people are looking at two different websites.
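
A split like this can be implemented in several ways. One common approach, sketched here under the assumption that every visitor carries a stable ID (for example, from a cookie), is to hash that ID into a bucket so the same person keeps seeing the same version on every visit. The function `assign_variant` and the experiment name are hypothetical, not part of Miva or any specific testing tool.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-test") -> str:
    """Return "A" or "B" for a visitor; the same inputs always give the same answer."""
    # Hash experiment name + visitor ID so each experiment splits traffic independently.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    # Buckets 0-49 see variant A, buckets 50-99 see variant B: a 50/50 split.
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    for visitor in ["visitor-1", "visitor-2", "visitor-3"]:
        print(visitor, assign_variant(visitor))
```

Hashing instead of picking randomly on each page load keeps a returning visitor from flipping between versions, which would muddy the results.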

Noah Kagan, founder of AppSumo, recently laid out what he has learned from running hundreds of A/B tests. The harsh reality: only 1 out of 8 of those tests has driven significant change.

When running A/B tests, it is critical to wait until the test is complete and your data is statistically significant. A/B tests often produce a great result on the first day and then lose in the long run. As Kagan warns, “Be aware of premature e-finalization.”
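
One common way to check whether a difference is statistically significant is a two-proportion z-test, which asks how likely a gap this large would be if A and B really converted at the same rate. The sketch below is one standard version of that test, not the method Kagan or any particular tool uses; `two_proportion_z_test` is a hypothetical helper, and the day-one numbers in the example are invented for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). A small p-value (commonly < 0.05) suggests the
    difference is unlikely to be random noise.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis that A and B perform equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Zero (or all) conversions in both groups: no evidence of any difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: erfc(|z| / sqrt(2)) equals 2 * (1 - standard normal CDF at |z|).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical day-one numbers: 400 visitors per variant.
    z, p = two_proportion_z_test(conv_a=20, n_a=400, conv_b=32, n_b=400)
    print(f"z = {z:.2f}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

With those made-up numbers, variant B shows a 60% relative lift (8% versus 5%), yet the p-value still lands above the conventional 0.05 cutoff, which is exactly the kind of early result that can evaporate as more traffic arrives. Re-running a check like this every day and stopping at the first significant result also inflates the false-positive rate, so it is safer to fix the sample size before the test starts.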

These numbers seem to be consistent with what others in the industry report. Max Al Farakh, co-founder of JitBit, says his company has found only 1 out of 10 A/B tests to produce any kind of result.

1. Do Weekly Iterations

Before you dive into a test, determine your hypothesis. Ask yourself, “How do I think this change will improve my conversions?”

Things to keep in mind:

2. Be Patient & Persistent

A common misconception about A/B tests is that they always produce huge gains. In reality, it may take a few thousand visits before a test yields any valuable information. To avoid waiting around for small improvements, test bigger changes. With so many case studies online claiming that a new button color improved sales by 400%, it is no surprise that people are shocked when their own tests fall short. What those companies typically don’t show is that it took them multiple tests and changes to get that kind of result.
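
The link between the size of a change and the traffic it needs can be made concrete with the standard normal-approximation sample-size formula for comparing two conversion rates. The sketch below assumes a 3% baseline conversion rate, a two-sided 5% significance level, and 80% power; `visitors_per_variant` is a hypothetical helper, and these are illustrative inputs, not Miva or JitBit figures.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant to detect a relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # chance of detecting a real lift
    # Sum of the per-visitor variances of the two conversion rates.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical 3% baseline conversion rate.
    for lift in (0.05, 0.20, 0.50):
        print(f"{lift:.0%} lift: {visitors_per_variant(0.03, lift):,} visitors per variant")
```

Under these assumptions, a 50% lift can be detected with a few thousand visitors per variant, while a 5% lift needs roughly 200,000, which is why testing bigger changes gets you an answer much sooner.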

“I have not failed 10,000 times. I have successfully found 10,000 ways that will not work.” – Thomas Alva Edison, inventor of the light bulb

3. Change Your Expectations

When I talk about A/B tests failing, I simply mean that there is no significant lift in your conversions compared to your original design. Don’t mistake a failed test for failed effort. Expect most of your tests to fail, and think of this as a good thing: every test that fails gets you one step closer to one that works. It is all about setting the right expectations and treating each test as a learning experience.

As Lance Jones, CRO Consultant of Copy Hackers, writes, “Every test comes with lift: it’s negative, neutral, or positive. And every test comes with learning—you just have to have a good hypothesis going into the test.”

Going in knowing that 7 out of 8 of your tests will produce insignificant improvements will help keep your expectations realistic. Stick with it, and don’t give up after multiple insignificant results.
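
To see why a string of flat results is normal, treat each test as an independent trial that wins at Kagan’s reported 1-in-8 rate. The independence and the fixed win rate are simplifying assumptions, and `chance_of_no_winners` is a hypothetical helper.

```python
def chance_of_no_winners(num_tests: int, win_rate: float = 1 / 8) -> float:
    """Probability that none of num_tests independent tests produces a winner."""
    # Each test "loses" with probability (1 - win_rate); multiply across tests.
    return (1 - win_rate) ** num_tests


if __name__ == "__main__":
    for n in (1, 3, 5, 10):
        print(f"{n} tests: {chance_of_no_winners(n):.0%} chance of zero winners")
```

Under that assumption, five tests in a row with no winner happens about half the time (0.875^5 ≈ 0.51), and even ten straight losses still happen roughly a quarter of the time (0.875^10 ≈ 0.26).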

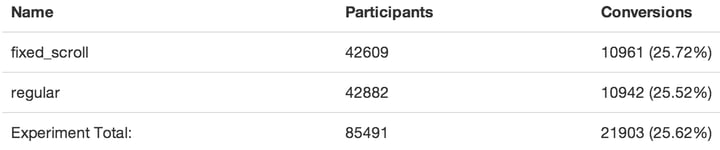

Even with all the right preparations in place, JitBit still saw no change in conversions for these A/B tests:

JitBit decided to make their call-to-action buttons fixed-position so they would stay visible as the visitor scrolls the page. You can see it in action on the hosted help desk landing page. They were absolutely sure it would convert better.

As you can see, the test had 85k participants, and both variants had exactly the same number of conversions. Insane.

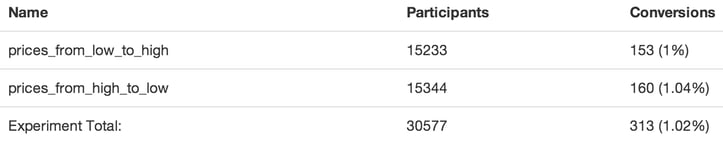

Another example: JitBit sorted the plans in their pricing tables from most expensive to cheapest and vice versa, and tracked orders of the most expensive plan.

Again: 30k participants and zero difference.

In case you still think that changing the color of that call-to-action button will raise your conversions, consider this:

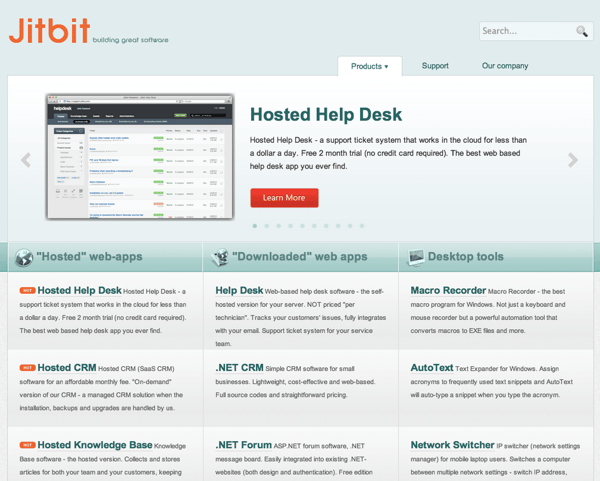

Even when JitBit radically redesigned their entire website from this:

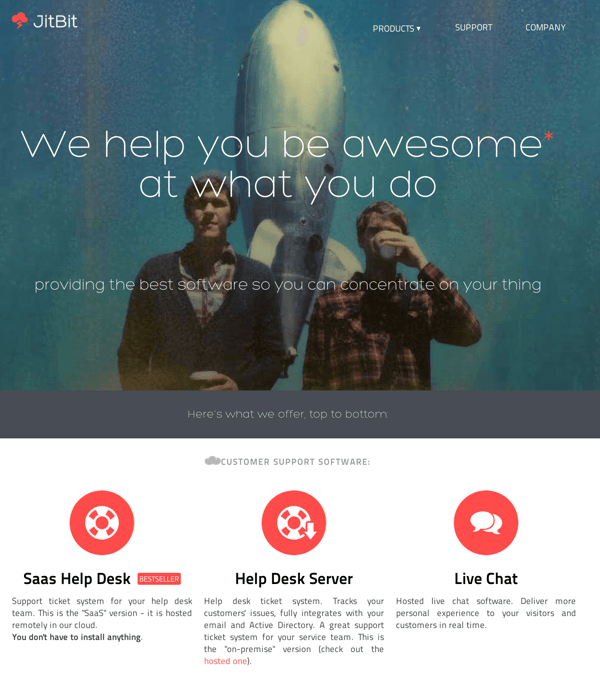

To this:

Again, they saw absolutely no change in their conversions.

Does that mean these tests were a waste of time? Absolutely not. Just because JitBit didn’t see a change in conversions doesn’t mean the tests weren’t valuable. In fact, they were incredibly valuable: JitBit learned that these elements make no difference to their specific customers. That is information worth testing for!

The Bottom Line: You can get a lot out of A/B testing; you just have to put in the time and energy it requires.

This is a follow-up article to “Most of your AB-tests will fail” by JitBit.


Miva

Miva offers a flexible and adaptable ecommerce platform that evolves with businesses and allows them to drive sales, maximize average order value, cut overhead costs, and increase revenue. Miva has been helping businesses realize their ecommerce potential for over 20 years and empowering retail, wholesale, and direct-to-consumer sellers across all industries to transform their business through ecommerce.
